Unlocking the potential of AI tools with UX research

Three key opportunities where research can enhance the user experience

Artificial intelligence (AI) tools are having a moment.

In the last year, OpenAI has released several impressive products such as DALL-E 2, a natural language art generator, and ChatGPT, a do-it-all chatbot — and the company is now raising billions in funding during an economic downturn. Similarly, Gartner and McKinsey analysts tracking adoption and usage across the private sector have noted a surge in AI investment.

We can expect this technology to play a significant role in our lives and work in the coming years. But what role can UX researchers play in its development?

Common types of artificial intelligence tools

According to the Oxford English Dictionary, AI refers to “computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages.”

But what are some typical examples?

Recommendation engines: These algorithms learn from user data to suggest relevant content, products, or services. Everyday examples include e-commerce sites like Amazon.com, streaming platforms like Netflix, and social media feeds like Twitter.

Chatbots and virtual assistants: These tools process human language and simulate conversation for various tasks. Everyday examples include Alexa, Siri, and customer support chatbots.

Computer vision: These tools analyze images and videos to detect and recognize objects and faces. Everyday examples may be found on social media, photo sharing apps, and home security devices.

Generative AI: These tools generate content based on a “prompt” or input. Examples include art generators like DALL-E 2, text models like ChatGPT, and hybrid tools like Google Duplex.

In short, AI is a broad label that can refer to many different tools, or the underlying technology powering those tools. Some are highly technical and specialized, while others, like those mentioned above, are consumer-facing. In the latter case, users may or may not be aware of the AI behind the tool.

Across these tools, three key opportunities stand out as areas for UX research.

1. Help users form appropriate mental models

When the Nest Thermostat was released in 2011, it was praised for its fresh and intuitive design. Much of that praise came from how effectively, compared with traditional thermostats, it conveyed an accurate mental model of how household thermostats actually work.

The classic thermostat gives few clues about its mechanism, and many people wrongly assume it works like a bathroom faucet: crank it further in one direction for hotter temperatures, and vice versa. In fact, it works like a switch, turning the heat on when the room is below the target temperature and off once it gets there.
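To make the switch model concrete, here is a minimal Python sketch of an idealized on/off thermostat. The heating and heat-loss rates are made-up assumptions, and this is not how the Nest or any other product is implemented; it only shows why a higher setting makes the furnace run longer rather than hotter.

```python
def simulate(target: float, start: float = 15.0, minutes: int = 120,
             heat_rate: float = 0.1, loss_rate: float = 0.02) -> tuple[float, int]:
    """Simulate an idealized on/off thermostat (toy physics, all rates assumed).

    While the furnace is on it adds a fixed heat_rate degrees per minute;
    the room always loses loss_rate degrees per minute.
    Returns (final temperature, minutes the furnace ran).
    """
    temp = start
    minutes_on = 0
    for _ in range(minutes):
        # A switch, not a faucet: on below the target, off at or above it.
        if temp < target:
            temp += heat_rate  # same heat output whatever the target is
            minutes_on += 1
        temp -= loss_rate
    return temp, minutes_on

for target in (20.0, 22.0):
    final, minutes_on = simulate(target)
    print(f"target {target}: ends at {final:.1f}, furnace ran {minutes_on} min")
```

Raising the target from 20 to 22 degrees does not make the furnace heat any faster in this model; it simply stays on longer until the room reaches the new setpoint, then cycles on and off around it.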

The Nest interface conveys this switch-like behavior simply and elegantly. And within a few short years, its founders sold the company to Google for over $3 billion! That’s the potential of an appropriate mental model.

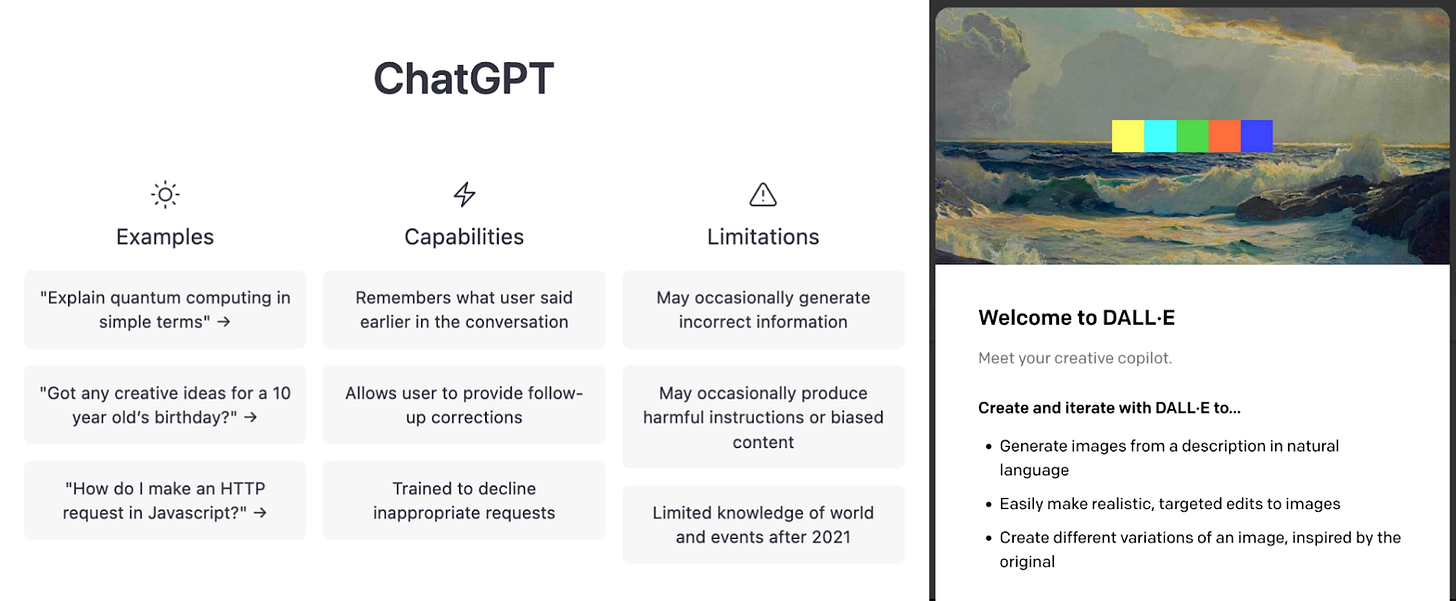

Meanwhile, many AI tools today share little information about how they work. Compare these introductory splash pages for ChatGPT and DALL-E 2:

Both platforms briefly describe their capabilities and give some example prompts (DALL-E’s not pictured here). Only ChatGPT describes its limitations in any detail — so novice DALL-E users may be surprised when the tool bungles faces, fingers, words, and relationships.

But neither talks about the mechanism. And more important than knowing what a tool can and can’t do is having some approximate understanding of how it does things and why it can’t do others.

Like many AI tools, both were trained to derive statistical relationships between variables in large datasets, so they have no abstract understanding of the underlying concepts. ChatGPT, for example, was trained on millions of pieces of writing to learn which words are most likely to follow one another in a given context: a kind of “autocomplete on steroids.” Notably, one user inadvertently stumbled onto that mental model by asking the tool to write in ciphers.
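The “autocomplete” idea can be illustrated with a deliberately tiny Python sketch: a bigram model that counts which word follows which in a small sample text, then keeps picking the most frequent follower. This is a toy for building intuition only. ChatGPT uses a large neural network over tokens rather than simple word counts, but the underlying task of predicting a likely continuation from context is similar.

```python
from collections import Counter, defaultdict

def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    followers: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def autocomplete(followers: dict[str, Counter], start: str, length: int = 5) -> str:
    """Greedily extend `start` by repeatedly picking the most frequent next word.

    The model has no notion of meaning: it only knows which words tended to
    follow which in its training text.
    """
    output = [start.lower()]
    for _ in range(length):
        options = followers.get(output[-1])
        if not options:
            break  # this word was never followed by anything in training
        output.append(options.most_common(1)[0][0])
    return " ".join(output)

corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)
print(autocomplete(model, "the"))  # prints "the cat sat on the cat"
```

The output is fluent-looking but not sensible, because each word is chosen only by how often it followed the previous one. That gap between fluency and understanding is exactly what an accurate mental model helps users anticipate.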

By understanding how tools work, users can avoid undesirable outcomes and achieve more desirable ones. UX research can help elicit current mental models, and test the models conveyed by new design concepts.

2. Help users trust output to be safe and reliable

We often think of AI as a neutral tool.

But the truth is, it’s fundamentally shaped by the biases of the people who create it and the data it's trained on. And if we don't address these biases, we risk undermining trust in AI’s outputs, or worse: creating a world where our tools entrench unjust social dynamics.

Facial recognition technology, for example, is less accurate for people with darker skin tones, leading to potential bias in law enforcement. And several language models have been prematurely pulled from public release after producing objectionable responses with harmful stereotypes.

But it's not just about bias. AI tools are also known to "hallucinate," which Meta has defined as making confident statements that aren’t true. Hallucinations can take many forms, including non-existent citations and inaccurate math.

Both bias and hallucinations can have a significant impact on the ability of AI tools to be useful in critical applications such as medicine, law, education, or research.

One possible solution comes from the principle of explainable AI, which aims to make the reasons behind a model's outputs understandable to the people who rely on them. Another, suggested by Gavin Lew and Bob Schumacher of Bold Insight, is to allow folks trained in the nuances of technology and social science (like UX researchers) to collect the training data in a clean and principled way.

By providing transparency into the workings of AI systems, we can build trust in their outputs and promote their responsible use.
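Explainable AI covers many techniques. One of the simplest applies to linear models: because the score is a weighted sum, each input's contribution (weight times value) can be shown to the user alongside the result. The Python sketch below assumes a hypothetical support-ticket urgency model; the feature names, weights, and bias are invented for illustration. Modern deep models need more involved attribution methods, but the goal of surfacing why an output was produced is the same.

```python
# Hypothetical weights for a toy linear ticket-urgency model; not from any real system.
WEIGHTS = {
    "hours_waiting": 0.25,
    "mentions_outage": 3.0,
    "prior_escalations": 0.5,
    "auto_resolved_before": -1.5,
}
BIAS = 0.5

def score_with_explanation(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the model's score plus each feature's additive contribution.

    For a linear model, score = BIAS + sum(weight * value), so the
    contributions add up exactly to the score minus the bias.
    """
    contributions = [(name, WEIGHTS[name] * features[name]) for name in WEIGHTS]
    score = BIAS + sum(c for _, c in contributions)
    # List the most influential factors first, whether they raised or lowered the score.
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return score, contributions

ticket = {"hours_waiting": 8, "mentions_outage": 1, "prior_escalations": 2, "auto_resolved_before": 1}
score, why = score_with_explanation(ticket)
print(f"urgency score = {score}")
for name, contribution in why:
    print(f"  {name}: {contribution:+.1f}")
```

With these made-up inputs the score is 5.0, and the explanation lists mentions_outage (+3.0) first, giving a user something concrete to check or dispute.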

3. Help users collaborate with the tool

The first output from an AI tool isn’t always the best.

However, results can improve when human and artificial intelligence collaborate. That's why many of the most effective recommendation engines take user feedback into account instead of relying solely on behavioral data. Similarly, users creating artwork with generative tools will often iteratively revise their prompts to improve the image.
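One simple way to picture a recommender that listens to feedback is sketched below in Python, under made-up assumptions: each item starts from a behavioral score (a hypothetical count of past clicks on its category) and is then adjusted by explicit thumbs-up or thumbs-down signals, which can override what behavior alone would suggest. The catalog, the feedback weight, and the scoring rule are all hypothetical; production engines are far more sophisticated.

```python
# Hypothetical catalog: item -> category.
CATALOG = {
    "Space Documentary": "documentary",
    "Heist Thriller": "thriller",
    "Baking Competition": "reality",
    "Spy Thriller": "thriller",
}

def rank(click_counts: dict[str, int], feedback: dict[str, int],
         feedback_weight: float = 5.0) -> list[str]:
    """Rank items by past clicks on their category plus explicit feedback.

    click_counts: implicit behavioral signal, clicks per category.
    feedback: explicit signal per item, +1 (thumbs up) or -1 (thumbs down).
    feedback_weight: assumed multiplier that lets explicit feedback outweigh
    habits the user no longer wants reinforced.
    """
    def score(item: str) -> float:
        behavioral = click_counts.get(CATALOG[item], 0)
        explicit = feedback.get(item, 0) * feedback_weight
        return behavioral + explicit

    # sorted() is stable, so ties keep their catalog order.
    return sorted(CATALOG, key=score, reverse=True)

clicks = {"thriller": 6, "documentary": 2, "reality": 1}
print(rank(clicks, feedback={}))
print(rank(clicks, feedback={"Heist Thriller": -1, "Space Documentary": 1}))
```

Without feedback, the thrillers dominate because of past clicks. One thumbs-down and one thumbs-up are enough to move the documentary to the top, which is the kind of human steering that behavioral data alone cannot capture.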

Humans working closely with AI systems often perform better than humans or computers alone.

Take freestyle chess tournaments, where teams can have any number of human and/or computer players. During one such tournament in 2005, several grandmasters combined their experience and computer aid to reach the finals. The surprise came at the very end, when a team of complete amateurs pulled out the victory. They had trained more extensively with their chosen chess engines, and entertained a wider variety of AI-suggested scenarios — at times, selecting inferior moves for a larger strategy.

These collaborative skills will set candidates apart in the job market, which is encouraging news for people whose first concern about these tools is job security. UX research can identify effective ways to help users build these skills.

The key is to learn how to work effectively with AI, rather than relying solely on it or fearing it.

Summary

Artificial intelligence tools—whether recommendation engines, chatbots, computer vision, or generative AI—offer incredible potential. But without proper UX research, that value will remain limited.

In this article, we looked at three opportunities where researchers can contribute:

Mental models: Currently, few users understand the mechanism behind AI tools. Tools that convey accurate mental models of how they work, and therefore of what they can and can’t do, are more usable.

Trust and reliability: AI tools reflect the bias of their creators and training sets, and sometimes confidently give false information. UX researchers can help collect better training data at scale, and explore ways to make the tool’s reasoning more transparent.

Effective collaboration: Users may avoid AI tools from fear or come to rely exclusively on them. Neither approach is ideal. UX researchers can seek ways to promote a partnership between the AI and user, which often produces the best outcomes.

Improvements in these areas may help overcome user resistance and other barriers to adoption. They may also help users achieve better results than they would with technically equivalent tools. Ultimately, AI tools with the best user experience will have an edge in a highly competitive space.

Further reading and resources

If you want to apply UXR to AI tools…

As a primer, I highly recommend Lew and Schumacher’s book, AI and UX (2020).

I haven’t yet read Ben Shneiderman’s 2022 book on Human-Centered AI, but it’s on my list. An extract is available.

There are also various guidelines and heuristics for designing and/or researching AI tools:

Gary Marcus is a veteran research scientist in the field whose perspective on the limits of current tools I find thought-provoking.

See: How come GPT can seem so brilliant one minute and so breathtakingly dumb the next? from his Substack newsletter.

Alternatively, if you want to apply AI tools to UXR…

ICYMI: Can ChatGPT write a proper usability test script? I put ChatGPT to the test and asked it to prepare a moderator’s guide. While the results were impressive, they still missed the mark. I explore some possibilities for its use in our work.
